Unsplash is a great Ghost built-in integration that allows you to quickly add an image to your posts, and I personally use it every time to add the Feature Image to all my articles (the big one below the title, before the content).

Unfortunately, out of the box the image from Unsplash is really big (2000px wide) and impacts on the page speed of the site since the browser will download the image first then continue with the rest of the page.

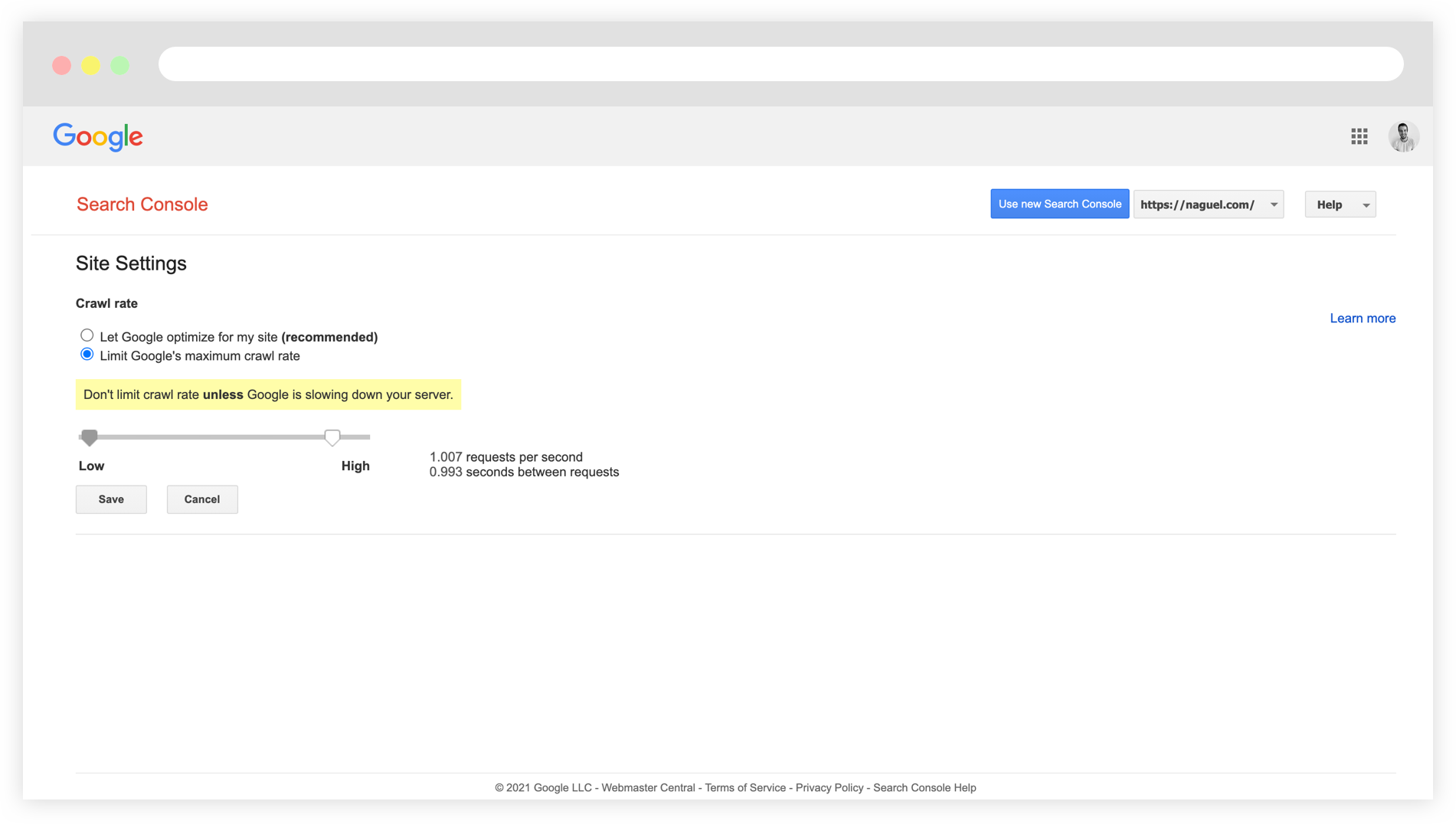

There's no much we can do about the image size as we can't follow the "How to use responsive images in Ghost themes" official guide because that only applies to images you manually upload and not those coming from third-parties...

Dynamic image sizes are not compatible with externally hosted images. If you insert images from Unsplash or you store your image files on a third party storage adapter then the image url returned will be determined by the external source.

...but with a little bit of HTML and JavaScript we can lazy load them to prioritize the content over the image download.

Usually, the Feature Image in the Ghost theme will look something like:

<img src="{{feature_image}}" />This is a classic img tag with the src containing the URL of the image. But we need to do some changes here first before moving to the JS side of this technique.

In the src we are going to put a placeholder image to avoid having a broken image while the rest of the page loads, we are going to move the actual image to the data-src attribute, and finally we'll add a new class to the element.

<img src="{{asset "images/placeholder.png"}}" data-src="{{feature_image}}" class="lazyload" />

The placeholder image should be a small image in terms of weight. I'm using an image with a solid colour of 183 bytes so I can "reserve" the space of the final Feature Image to avoid "jumps" in the browser while everything loads.

Finally, the JS is quite simple. We need to wait for the window to be loaded, get all the img elements with the lazyload class, and replace the src with what's on the data-src so we trigger the actual image download.

window.addEventListener('load', (event) => {

let images = document.getElementsByClassName('lazyload');

for (let i = 0; i < images.length; i++) {

images[i].src = images[i].dataset.src;

}

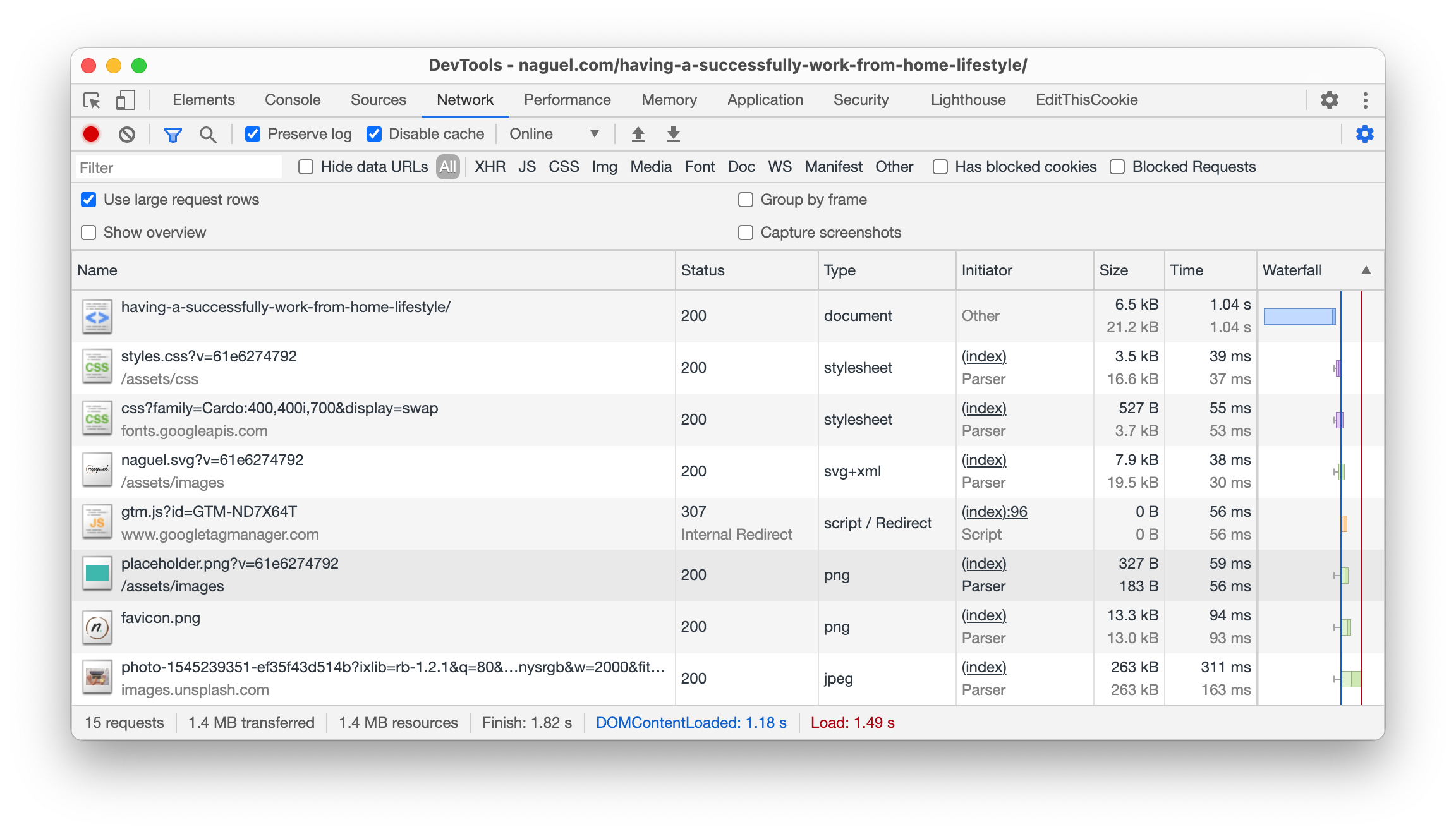

});With this in place we should see that the content is prioritized over the Unsplash image, and if we are using a placeholder we should see that first in the "Network" tab of our browser's DevTools, with the actual image loading later.