As weird as it sounds, Google can hit your site at a pace your server can't handle, causing page speed issues and potentially taking the site down because of high CPU usage and too many MySQL connections.

Google claims to be smart enough to self-regulate the rate at which it crawls a site (it says it has "sophisticated algorithms to determine the optimal crawl speed for a site"), but that doesn't always hold.

If you can contact your hosting provider, they might be able to tell you how many requests the bots are making (around 20,000 in the last six hours could be enough to cause issues) and share the logs for those requests.
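If you have access to the raw access log yourself, a similar count can be sketched in a few lines of Python. This is only a rough sketch: it assumes the server writes the common "combined" log format, the function name `count_googlebot_hits` is made up for illustration, and the second sample line is a hypothetical non-Google visitor.

```python
import re
from collections import Counter

# Assumed layout (combined log format):
# IP identity user [timestamp] "request" status size "referer" "user agent"
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def count_googlebot_hits(lines):
    """Count requests per client IP whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("ip")] += 1
    return hits

sample = [
    # Log line taken from this article.
    '185.93.229.3 - - [23/Sep/2021:05:32:55 +0000] "GET /about/ HTTP/1.0" 200 20702 '
    '"-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    # Hypothetical regular visitor, added only for contrast.
    '203.0.113.7 - - [23/Sep/2021:05:32:56 +0000] "GET / HTTP/1.1" 200 512 '
    '"-" "Mozilla/5.0 (X11; Linux x86_64)"',
]

hits = count_googlebot_hits(sample)
print(hits.most_common(10))
```

To run it against a real log, pass an open file instead of `sample`. Keep in mind that the user agent string is only a claim made by the client, so a high count here is a starting point rather than proof.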

185.93.229.3 - - [23/Sep/2021:05:32:55 +0000] "GET /about/ HTTP/1.0" 200 20702 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

Make sure that the IPs you are seeing in the logs really belong to Google being careless about the crawl rate, and not to a DDoS attack in disguise.
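One way to run that check is the reverse and forward DNS lookup that Google documents for verifying its crawlers: resolve the IP to a hostname, confirm the hostname ends in one of Google's crawler domains, then resolve that hostname back and confirm it returns the same IP. The sketch below is a minimal version of that idea. The function name `is_real_googlebot` and the injectable `reverse` and `forward` parameters are assumptions added here so the logic can be exercised without touching the network.

```python
import socket

# Domains Google documents for Googlebot hostnames.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")

def is_real_googlebot(ip, reverse=socket.gethostbyaddr, forward=socket.gethostbyname_ex):
    """Reverse-resolve the IP, check the hostname, then forward-resolve it back."""
    try:
        hostname = reverse(ip)[0]          # (hostname, aliases, addresses)
    except OSError:
        return False                       # no reverse DNS record
    if not hostname.endswith(GOOGLE_SUFFIXES):
        return False                       # hostname is not in a Google crawler domain
    try:
        addresses = forward(hostname)[2]   # (hostname, aliases, addresses)
    except OSError:
        return False                       # hostname does not resolve
    return ip in addresses                 # forward lookup must point back to the same IP
```

Calling `is_real_googlebot(ip)` with the defaults performs live DNS lookups, so it is better suited to a handful of top IPs from the log than to every line. If the site sits behind a proxy or CDN, the IP in the log may belong to that proxy rather than to the original client, in which case the check has to run against the forwarded client address instead.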

If you are certain about Googlebot being the one causing the problems with your server, you need to adjust the crawl rate first, and then block Google, temporarily, while you wait for the new crawl rate to take effect.

Adjust the crawl rate using Google Search Console

If you are thinking about controlling the crawl rate using the Crawl-delay directive on your robots.txt file, forget it. Google ignores that.

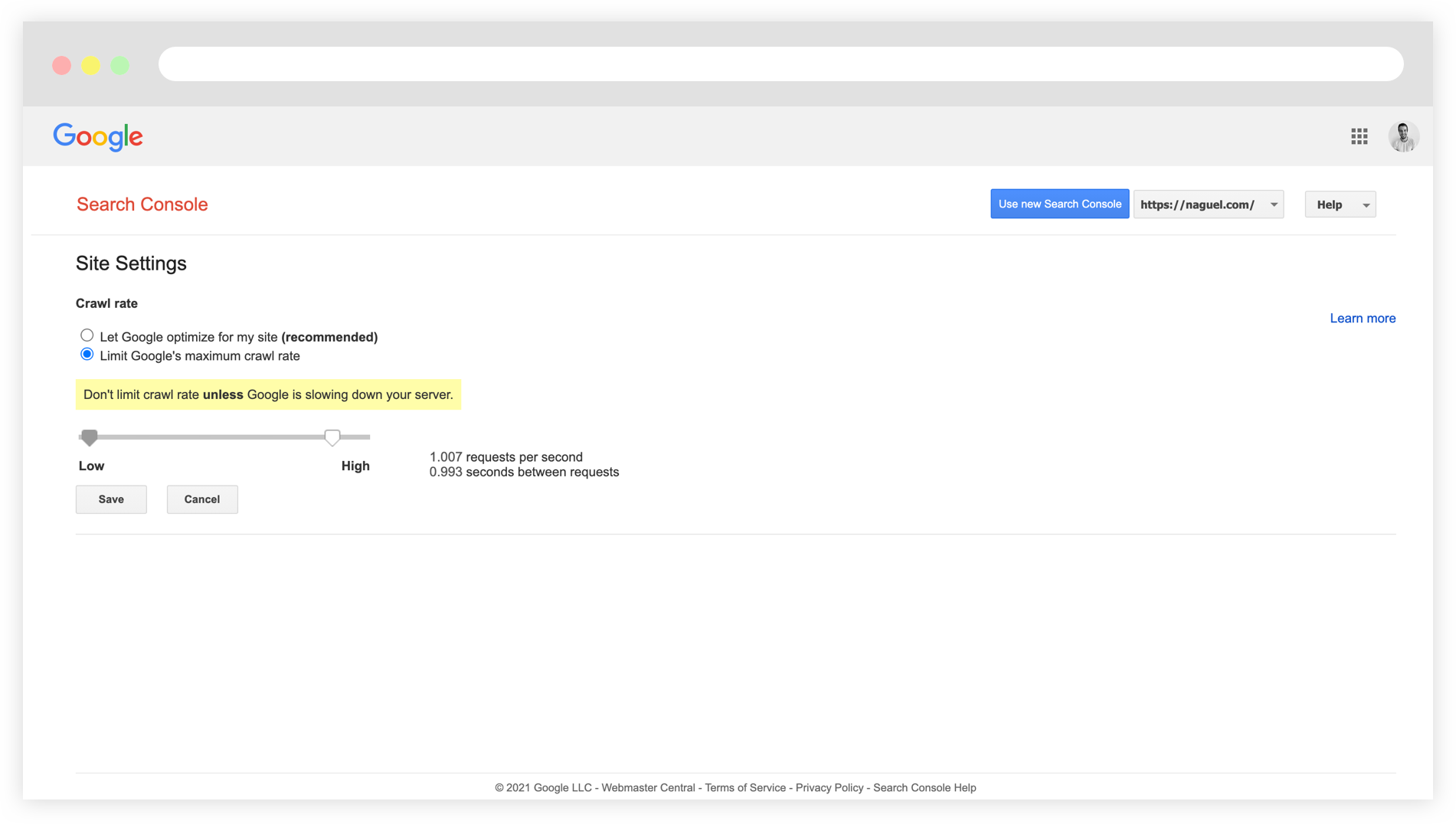

You need to use what's remaining of the old Google Search Console, not the new fancy one, here https://www.google.com/webmasters/tools/settings.

Selecting the “Limit Google's maximum crawl rate” option will display a slider for you to configure a lower crawl rate.

How low? I can't tell you exactly, since that depends on your site and server capacity, but as a reference, I remember configuring it as follows for a Magento project running on an XL-size AWS instance.

1 request per second

1 second between requests

You can try something like that, or even lower, maybe at 0.5 requests per second.
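To put those numbers side by side with what the hosting provider reports, the arithmetic can be sketched as below. The helper names are made up for illustration. The 20,000 requests in six hours mentioned earlier works out to a little under one request per second, and halving the rate doubles the gap between requests.

```python
def requests_per_second(request_count, hours):
    """Average request rate over a time window."""
    return request_count / (hours * 3600)

def seconds_between_requests(rate):
    """Gap between requests for a given rate in requests per second."""
    return 1 / rate

def requests_in_window(rate, hours):
    """Total requests a steady rate produces over a time window."""
    return rate * hours * 3600

observed = requests_per_second(20000, 6)
print(f"observed: {observed:.2f} requests per second")
print(f"gap at 0.5 requests per second: {seconds_between_requests(0.5):.0f} seconds")
print(f"requests in six hours at 0.5 per second: {requests_in_window(0.5, 6):.0f}")
```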

Block Googlebot temporarily for a few days

So, here's the catch: the new crawl rate setting is not immediately applied.

After saving the new crawl rate you'll get a message saying that "Your changes were successfully saved and will remain in effect until Sep 17, 2021" but it's not until you open up the confirmation email that you'll read that "within a day or two, Google crawlers will change crawling to the maximum rate that you set".

This means that your site will keep on getting hit at the same pace for a day or two, so the solution is to block Google for that period of time while you wait for the new crawl rate to take effect.
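How you block Google depends on your stack, and in practice it is usually a web server or firewall rule. As an illustration of the idea only, here is a minimal sketch written as Python WSGI middleware, which assumes a Python application and would not apply as-is to a PHP site such as Magento. It answers any request whose user agent contains "Googlebot" with a 503 and a `Retry-After` header, a response Google's crawler documentation describes as a signal to slow down. The names `block_googlebot` and `site` and the one-hour retry value are assumptions for this example.

```python
def block_googlebot(app, retry_after_seconds=3600):
    """Wrap a WSGI app so requests claiming to be Googlebot get a 503."""
    def middleware(environ, start_response):
        agent = environ.get("HTTP_USER_AGENT", "")
        if "Googlebot" in agent:
            body = b"Temporarily unavailable for crawlers.\n"
            start_response("503 Service Unavailable", [
                ("Content-Type", "text/plain"),
                ("Content-Length", str(len(body))),
                ("Retry-After", str(retry_after_seconds)),
            ])
            return [body]
        # Everyone else reaches the real application untouched.
        return app(environ, start_response)
    return middleware

def site(environ, start_response):
    # Stand-in for the real application (hypothetical).
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"Hello, visitor.\n"]

application = block_googlebot(site)
```

Because the check relies on the user agent string, it also turns away anything merely pretending to be Googlebot, which is harmless for a temporary block.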

Remember to lift the ban on Google after 2 days, and keep monitoring over the following days to confirm that Google isn’t affecting site performance again. If it is, the new crawl rate isn't low enough and you might need to bring it down a little more.